Apple researchers have developed an AI model that dramatically improves extremely dark photos by integrating a diffusion-based image model directly into the camera’s image processing pipeline, allowing it to recover detail from raw sensor data that would normally be lost. Here’s how they did it.

The problem with extreme low-light photos

You’ve probably taken a photo in very dark conditions and ended up with an image full of grainy digital noise.

This happens when the image sensor doesn’t capture enough light.

To try to make up for this, companies like Apple have been applying image processing algorithms that have been criticized for creating overly smooth, oil-painting-like effects, where fine detail disappears or gets reconstructed into something barely recognizable or readable.

Enter DarkDiff

To tackle this problem, researchers from Apple and Purdue University have developed a model called DarkDiff. Here’s how they present it in a study called “DarkDiff: Advancing Low-Light Raw Enhancement by Retasking Diffusion Models for Camera ISP”:

High-quality photography in extreme low-light conditions is challenging but impactful for digital cameras. With advanced computing hardware, traditional camera image signal processor (ISP) algorithms are gradually being replaced by efficient deep networks that enhance noisy raw images more intelligently. However, existing regression-based models often minimize pixel errors and result in oversmoothing of low-light photos or deep shadows. Recent work has attempted to address this limitation by training a diffusion model from scratch, yet those models still struggle to recover sharp image details and accurate colors. We introduce a novel framework to enhance low-light raw images by retasking pre-trained generative diffusion models with the camera ISP. Extensive experiments demonstrate that our method outperforms the state-of-the-art in perceptual quality across three challenging low-light raw image benchmarks.

In other words, rather than applying AI in the post-processing stage, they retasked Stable Diffusion (an open-source model trained on millions of images) to understand what details should exist in dark areas of photos considering their overall context, and integrated it into the image signal processing pipeline.

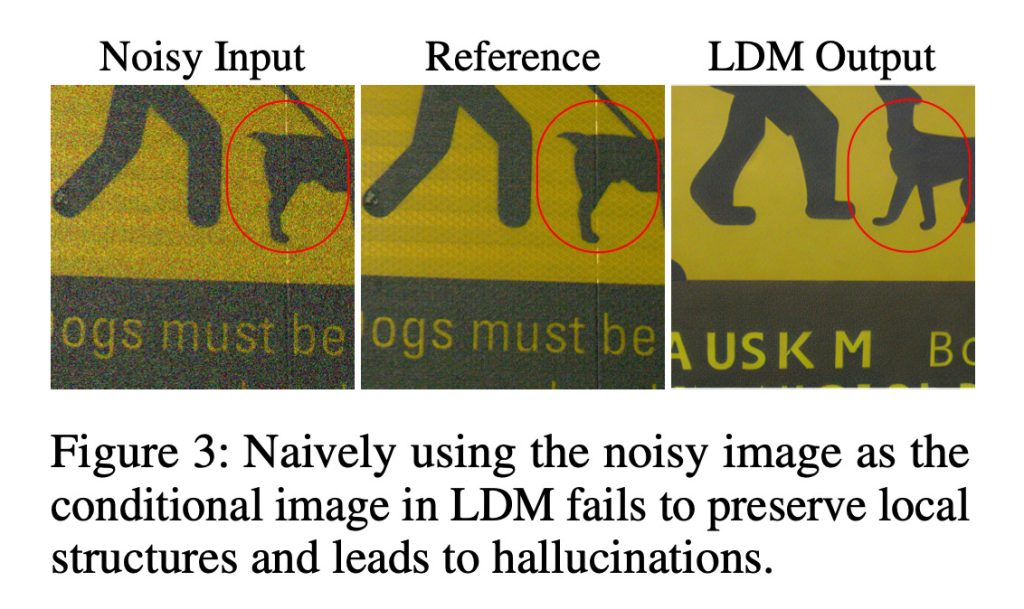

Their approach also introduces a mechanism that computes attention over localized image patches, which helps preserve local structures and mitigates hallucinations like the one in the image below, where the reconstruction AI changes the image content entirely.

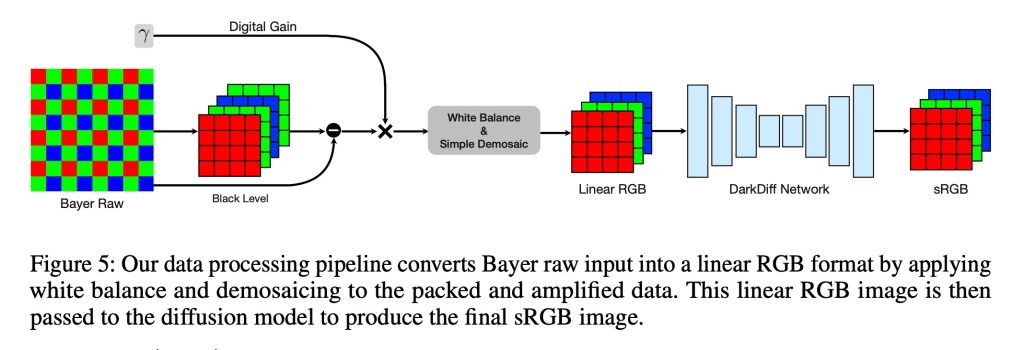
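To give a rough feel for what attention over localized patches means, here is a minimal sketch of generic windowed self-attention. It is not DarkDiff's actual mechanism, which the article does not detail. The function names, the one-dimensional token layout, and the use of identity projections (query = key = value) are all simplifying assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(tokens):
    """Plain self-attention with identity projections (query = key = value)."""
    dim = len(tokens[0])
    out = []
    for query in tokens:
        scores = [
            sum(a * b for a, b in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        out.append(
            [sum(w * value[i] for w, value in zip(weights, tokens)) for i in range(dim)]
        )
    return out


def local_attention(tokens, window):
    """Hypothetical helper: run attention separately inside each window.

    Tokens in one window never see tokens from another window, so content
    cannot be mixed in from distant parts of the sequence.
    """
    out = []
    for start in range(0, len(tokens), window):
        out.extend(attend(tokens[start:start + window]))
    return out


# Two "patches" with very different content (made-up feature vectors).
tokens = [[1.0, 0.0], [1.0, 0.0], [0.0, 5.0], [0.0, 5.0]]
local_out = local_attention(tokens, window=2)
global_out = local_attention(tokens, window=len(tokens))
```

With a window of two, each patch only attends to itself, so the first patch keeps a zero in its second feature. With a single window covering everything, the first patch picks up some of the second patch's content, which is the kind of cross-region mixing that localized attention is meant to limit.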

With this approach, the camera’s ISP still handles the early processing needed to make sense of the raw sensor data, including steps like white balance and demosaicing. DarkDiff then operates on this linear RGB image, denoising it and directly producing the final sRGB image.
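The early ISP steps mentioned above can be illustrated with a very small sketch. It assumes an RGGB Bayer layout, made-up white balance gains, and a crude half-resolution demosaic that collapses each 2x2 cell into one pixel. Real ISPs interpolate at full resolution and are far more elaborate, and none of these function names come from the study.

```python
# Assumed RGGB layout: which color each position in a 2x2 Bayer cell records.
BAYER_PATTERN = (("r", "g"), ("g", "b"))


def white_balance(bayer, gains):
    """Scale every raw sample by the gain of the color channel it belongs to."""
    return [
        [value * gains[BAYER_PATTERN[y % 2][x % 2]] for x, value in enumerate(row)]
        for y, row in enumerate(bayer)
    ]


def demosaic_half_res(bayer):
    """Collapse each 2x2 RGGB cell into one linear RGB pixel.

    The two green samples are averaged. This halves the resolution and is
    only a stand-in for the interpolation a real ISP would perform.
    """
    height, width = len(bayer), len(bayer[0])
    rgb = []
    for y in range(0, height - 1, 2):
        row = []
        for x in range(0, width - 1, 2):
            red = bayer[y][x]
            green = (bayer[y][x + 1] + bayer[y + 1][x]) / 2
            blue = bayer[y + 1][x + 1]
            row.append((red, green, blue))
        rgb.append(row)
    return rgb


# A single 2x2 raw cell with illustrative values and gains (assumptions).
raw = [[0.25, 0.5], [0.25, 0.125]]
gains = {"r": 2.0, "g": 1.0, "b": 4.0}
linear_rgb = demosaic_half_res(white_balance(raw, gains))
```

The result is a linear RGB image, which is the kind of input the article says DarkDiff takes over from before producing the final sRGB output.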

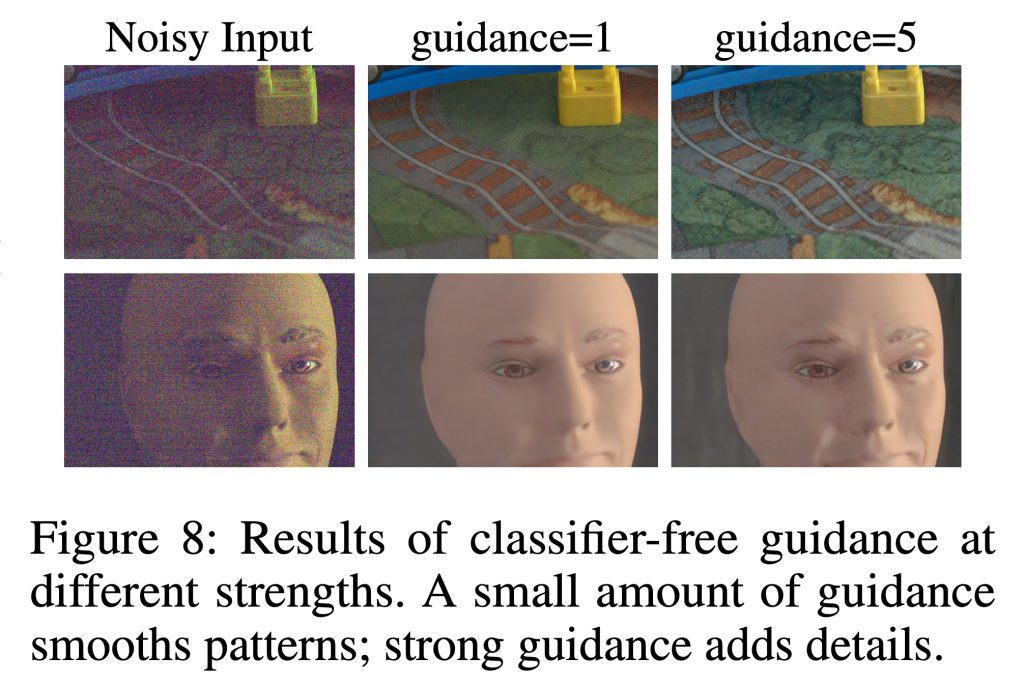

DarkDiff also uses a standard diffusion technique called classifier-free guidance, which controls how strongly the model follows the input image versus its learned visual priors.

With lower guidance, the model produces smoother patterns, while increasing guidance encourages it to generate sharper textures and finer detail, which in turn also raises the risk of producing unwanted artifacts or hallucinated content.
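For readers curious about the mechanics, classifier-free guidance is commonly written as a blend of two noise predictions from the same model, one made with the conditioning and one without. The sketch below shows that standard formula on plain lists of numbers. How DarkDiff defines its two branches is not described here, so the function name and sample values are illustrative assumptions.

```python
def apply_guidance(eps_uncond, eps_cond, scale):
    """Combine two noise predictions with the standard guidance formula.

    result = eps_uncond + scale * (eps_cond - eps_uncond)

    scale = 0 returns the unconditional prediction, scale = 1 returns the
    conditional one, and scale > 1 pushes further in the conditional direction.
    """
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Made-up predictions for two pixels, only to show the effect of the scale.
eps_uncond = [0.0, 1.0]
eps_cond = [1.0, 3.0]
mild = apply_guidance(eps_uncond, eps_cond, 1.0)
strong = apply_guidance(eps_uncond, eps_cond, 2.0)
```

Raising the scale amplifies the difference between the two predictions, which is consistent with the trade-off described above: more pronounced detail, but also more room for artifacts.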

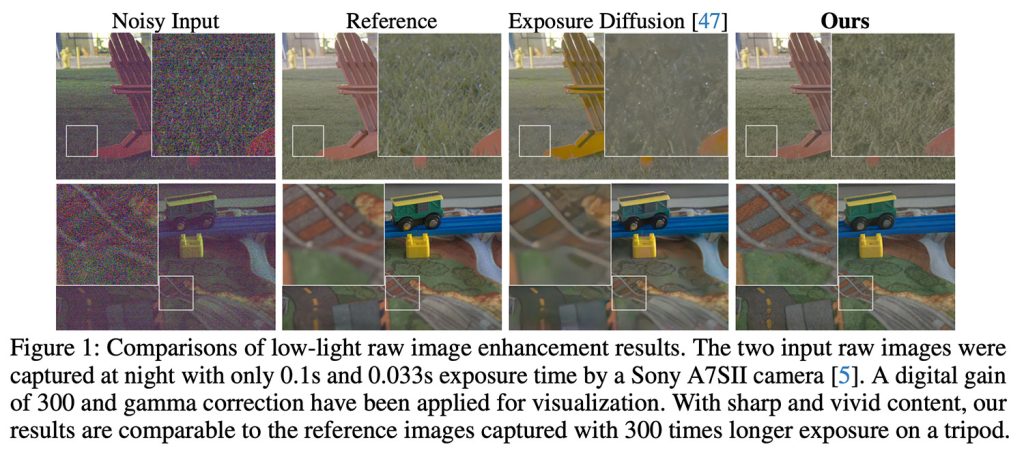

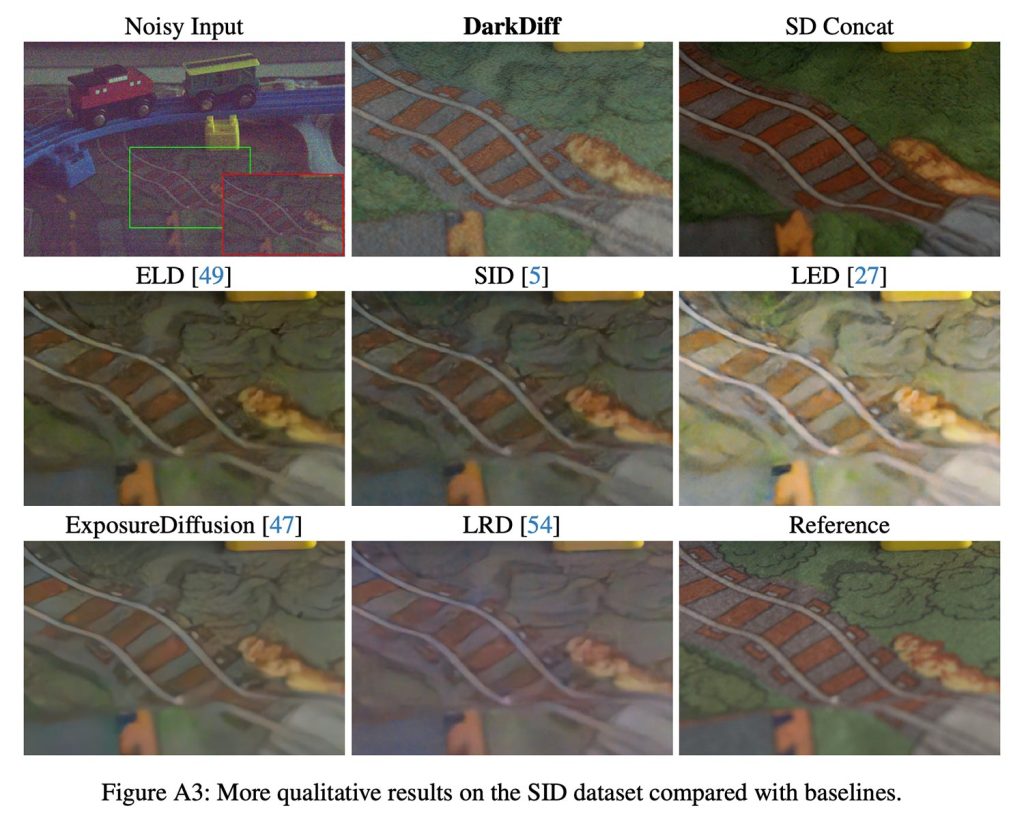

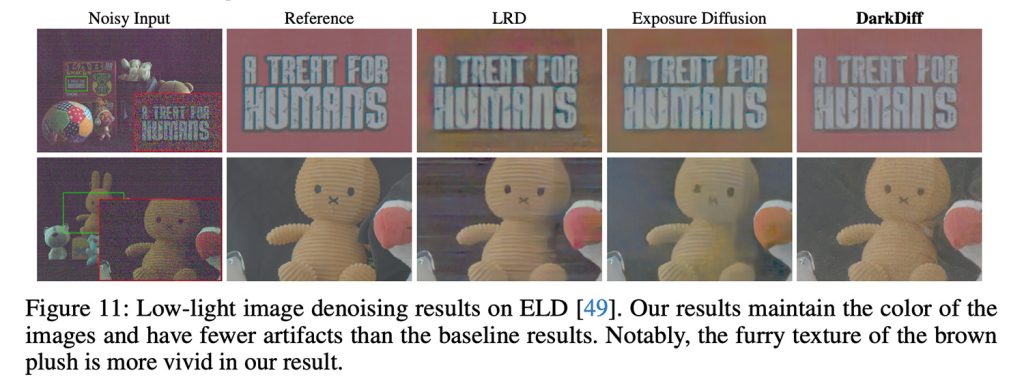

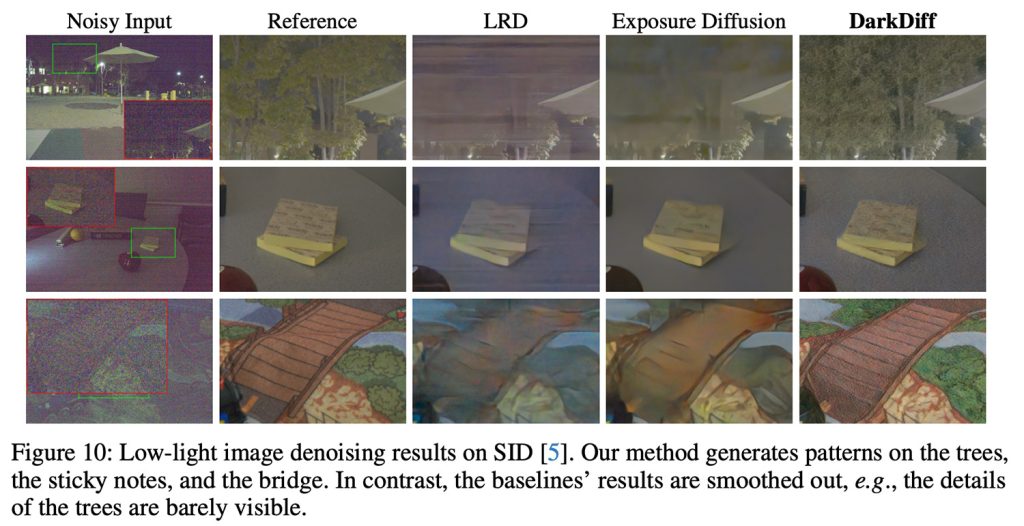

To test DarkDiff, the researchers used real photos taken in extremely low light with cameras such as the Sony A7SII, comparing the results against other raw enhancement models and diffusion-based baselines, including ExposureDiffusion.

The test images were captured at night with exposure times as short as 0.033 seconds, and DarkDiff’s enhanced versions were compared against reference photos captured on a tripod with exposures 300 times longer.
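To put those numbers in perspective, here is a quick back-of-the-envelope calculation. It assumes the 300x ratio applies to the shortest 0.033-second exposure, which the article does not state explicitly, and the helper names are made up for this example.

```python
import math


def reference_exposure(short_exposure_s, ratio):
    """Exposure time of the reference shot, given the short exposure and ratio."""
    return short_exposure_s * ratio


def stops_between(ratio):
    """Express an exposure ratio in photographic stops (each stop doubles the light)."""
    return math.log2(ratio)


reference_s = reference_exposure(0.033, 300)  # roughly 9.9 seconds
stops = stops_between(300)                    # a little over 8 stops
```

In other words, a short exposure of about 1/30 of a second would be compared against a reference of roughly ten seconds, a gap of a little more than eight stops of light.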

Here are some of the results (which we encourage you to see in full quality in the original study):

DarkDiff is not without its issues

The researchers note that their AI-based processing is significantly slower than traditional methods, and would likely require cloud processing to make up for the high computational requirements that would quickly drain battery if run locally on a phone. They also note limitations with non-English text recognition in low-light scenes.

It is also important to note that nowhere in the study is it suggested that DarkDiff will make its way to iPhones anytime soon. Still, the work demonstrates Apple’s continued focus on advancements in computational photography.

In recent years, this has become an increasingly important area of interest in the entire smartphone market, as customers demand camera capabilities that exceed what companies can physically fit inside their devices.

To read the full study and check out additional comparisons between DarkDiff and other denoising methods, follow this link.
